For many years now, all smartphones have looked alike, and increasingly so: a black slab with rounded corners. It is the back of the phone that reveals its brand, and sometimes its model, to everyone facing you. Photographic capabilities also remain the main differentiator (because it is a visible one?) between each brand's new models.

Photography is computation

The October 13 presentation of the iPhone 12 accordingly gave pride of place to its photo and video capabilities, and more precisely to what the phenomenal power of the nearly 12 billion transistors in the A14 "Bionic" chip makes possible in this area, whether through the cores of the central processing unit (CPU), the graphics processor (GPU), the subsystem dedicated to image processing (ISP), or the artificial intelligence module (the Neural Engine).

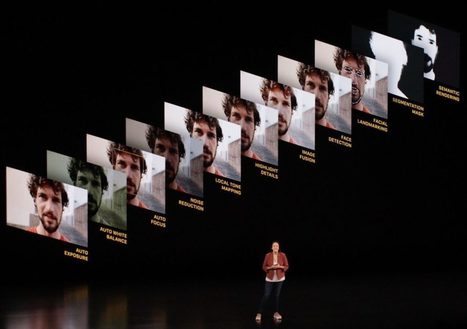

It is as if the optics and sensors themselves, despite improved performance (including optical stabilization that moves the sensor itself rather than the lenses), had been relegated to the background, eclipsed by a multitude of processing steps that run in real time and keep three quarters of the chipset busy during capture. While competitors tried in vain to keep up with Apple on the number of lenses (the only way to provide a quality optical zoom), and then to stand out on resolution by advertising ever more extravagant pixel counts, Apple has stuck with a 12 MP resolution ever since the iPhone X.

The difference lies elsewhere: in the staggering amount of processing performed before, during, and after what you believe to be a single shot, your own. Apple acknowledged this shift by explicitly using the term "computational photography" during the event. Yet there was a precedent.

The digitization of the camera is complete; now comes the digitization of the photographer